Markov Chains

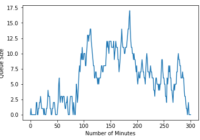

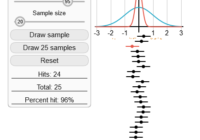

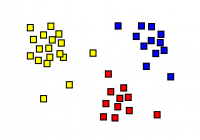

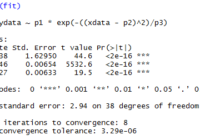

Markov chains are a way of stochastically modelling a series of events where the probability of each outcome depends only on the event that preceded it. This post gives an overview of some of the theory of Markov chains, along with a simple example implementation in Python.

Using Markov Chains to Model The…
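As a rough illustration of the idea above, here is a minimal Python sketch. It assumes a hypothetical two-state weather chain (`"sunny"` and `"rainy"`, with made-up transition probabilities); the names `TRANSITIONS`, `next_state`, `simulate` and `propagate` are illustrative, not taken from the post's own implementation. Each new state is sampled using only the current state, which is the defining property of a Markov chain. `propagate` pushes a whole probability distribution forward one step, so repeatedly applying it shows the distribution settling towards a stationary one.

```python
import random

# Hypothetical two-state weather chain (illustrative probabilities only).
# TRANSITIONS[a][b] is P(next state = b | current state = a); each row sums to 1.
TRANSITIONS = {
    "sunny": {"sunny": 0.9, "rainy": 0.1},
    "rainy": {"sunny": 0.5, "rainy": 0.5},
}


def next_state(current, transitions, rng):
    """Sample the next state using only the current state (the Markov property)."""
    options = transitions[current]
    states = list(options)
    weights = [options[s] for s in states]
    return rng.choices(states, weights=weights, k=1)[0]


def simulate(start, steps, transitions, seed=None):
    """Return a list of steps + 1 states, beginning with `start`."""
    rng = random.Random(seed)
    chain = [start]
    for _ in range(steps):
        chain.append(next_state(chain[-1], transitions, rng))
    return chain


def propagate(dist, transitions):
    """Advance a distribution one step: p'(b) = sum over a of p(a) * P(b | a)."""
    out = {s: 0.0 for s in transitions}
    for a, p in dist.items():
        for b, q in transitions[a].items():
            out[b] += p * q
    return out


if __name__ == "__main__":
    print(simulate("sunny", 10, TRANSITIONS, seed=42))

    # Start certain it is sunny and step the distribution forward repeatedly.
    dist = {"sunny": 1.0, "rainy": 0.0}
    for _ in range(50):
        dist = propagate(dist, TRANSITIONS)
    print(dist)
```

For these particular made-up probabilities, the distribution converges to roughly 5/6 sunny and 1/6 rainy regardless of the starting state, since that is the solution of `pi = pi * P` for this transition matrix.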